Depth-Sensing Cameras: How Many Types Are There and How Do They Work?

Depth camera modules are now a key technology in embedded systems, robotics, industrial automation, and autonomous vehicles. They let machines "see" the world in three dimensions, much as people do. Depth-sensing technologies, including time of flight (ToF), LiDAR, and structured light, give machines accurate spatial perception, which enables a high degree of interactivity and automation in areas such as autonomous vehicles, robot navigation, industrial automation, and augmented reality. This article looks at how depth cameras work, the different types of technology, and where they are applied. Our earlier articles introduced ToF and other 3D mapping cameras; refer to them for more detail.

Types of depth cameras and their underlying principles

Before looking at each type of depth camera, let's first understand what depth sensing is.

What is depth sensing?

Depth sensing is a technique for measuring the distance between a device and an object, or between two objects. It can be done with a 3D depth camera, which automatically detects any object near the device and measures the distance to it at any moment. The technology is useful for devices that integrate depth cameras and for autonomous mobile applications that make real-time decisions based on measured distance.

Of the depth-sensing technologies in use today, the three most common are:

1. Structured light

2. Stereo vision

3. Time of flight

   1. Direct time of flight (dToF)

      1. LiDAR

   2. Indirect time of flight (iToF)

Let's look more closely at the principle behind each depth-sensing technology.

Structured light

Structured-light cameras compute the depth and contour of an object by projecting a known light pattern (usually stripes) from a source such as a laser or LED onto the target and analyzing how the reflected pattern is distorted. The technology is highly accurate and stable under controlled lighting, but its limited range means it is mostly used for 3D scanning and modeling.

Stereo vision

Stereo vision cameras work much like human binocular vision: two cameras set a fixed distance apart capture images, and software detects and compares feature points in the two images to compute depth. The technology suits real-time applications under varied lighting conditions, such as industrial automation and augmented reality.
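To make the stereo principle concrete, here is a minimal Python sketch of the standard pinhole-stereo relation, depth = focal length × baseline / disparity. It assumes an idealized, rectified camera pair; the function name and the sample numbers are illustrative and do not describe any particular module.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Depth (m) of a feature matched in both images of a rectified stereo pair.

    focal_length_px: focal length expressed in pixels (assumed identical cameras)
    baseline_m:      distance between the two camera centers, in meters
    disparity_px:    horizontal shift of the feature between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical values: 700 px focal length, 6 cm baseline, 21 px disparity.
print(round(depth_from_disparity(700.0, 0.06, 21.0), 3))  # 2.0
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant objects than for near ones, which is one reason stereo accuracy falls off with range.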

Time-of-flight camera

Time of flight (ToF) is the time light takes to travel a given distance. Time-of-flight cameras use this principle to estimate the distance to an object from the time it takes emitted light to reflect off the object's surface and return to the sensor.
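The relation described above can be written as distance = c × t / 2, where t is the round-trip time and the division by two accounts for the light travelling out and back. A minimal Python sketch, with an illustrative function name:

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def distance_from_round_trip(round_trip_s):
    """Distance (m) to the target given the round-trip travel time of the light (s)."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The light covers the sensor-to-object distance twice, hence the factor 1/2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# A 10 ns round trip corresponds to roughly 1.5 m.
print(f"{distance_from_round_trip(10e-9):.3f} m")  # 1.499 m
```

The numbers show why ToF hardware needs very fine timing: one nanosecond of round-trip time is only about 15 cm of distance.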

A time-of-flight camera has three main components:

- ToF sensor and sensor module

- Light source

- Depth sensor

ToF can be divided into two types according to the method the depth sensor uses to determine distance: direct time of flight (dToF) and indirect time of flight (iToF). Let's look at the differences between the two.

Direct time of flight (dToF)

Direct time-of-flight (dToF) technology measures distance directly by emitting infrared laser pulses and timing how long those pulses take to travel from the source to the object and back.

dToF camera modules use special light-sensitive pixels, such as single-photon avalanche diodes (SPADs), to detect the sudden increase in photon count in the reflected light pulses, which allows time intervals to be calculated precisely. When a light pulse reflects off an object, the SPAD detects a sudden photon peak. Tracking the intervals between photon peaks then gives the time measurement.
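One common way to picture the photon-peak detection is as a histogram of photon arrival times: returns from the target pile up in one time bin, while ambient photons spread across all bins. The Python sketch below is a simplified illustration under that assumption, not a description of any specific sensor's pipeline; the function name, the 1 ns bin width, and the sample timestamps are made up.

```python
from collections import Counter

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def dtof_distance(arrival_times_ns, bin_width_ns=1.0):
    """Estimate distance (m) from photon arrival times measured from pulse emission.

    Arrival times are binned into a histogram; the fullest bin is taken as the
    reflected pulse, and its center is used as the round-trip time.
    """
    if not arrival_times_ns:
        raise ValueError("no photon events recorded")
    histogram = Counter(int(t // bin_width_ns) for t in arrival_times_ns)
    peak_bin, _count = max(histogram.items(), key=lambda item: item[1])
    round_trip_s = (peak_bin + 0.5) * bin_width_ns * 1e-9
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# Made-up events: scattered ambient photons plus a cluster near 20 ns.
events_ns = [3.2, 17.9, 20.1, 20.4, 20.6, 20.8, 33.5, 41.0]
print(f"{dtof_distance(events_ns):.2f} m")  # 3.07 m
```

In this sketch the bin width sets the depth resolution: a 1 ns bin spans about 15 cm, so finer timing bins are needed for finer depth steps.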

dToF cameras generally have low resolution, but their small size and low cost make them ideal for applications that do not require high resolution or real-time performance.

LiDAR

Since we are talking about using infrared laser pulses to measure distance, let's turn to LiDAR cameras.

LiDAR (Light Detection and Ranging) cameras use a laser transmitter to project a raster light pattern onto the scene being captured, scanning it back and forth. Distance is measured by timing how long a light pulse takes to reach the object and return to the camera sensor.

LiDAR sensors typically use one of two infrared laser wavelengths: 905 nanometers or 1550 nanometers. The shorter wavelength is absorbed less by water in the atmosphere, which favors long-range measurement. The longer wavelength, in turn, can be used in eye-safe applications, such as robots working near people.

Indirect time of flight (iToF)

Unlike direct time of flight, indirect time-of-flight (iToF) cameras compute distance by illuminating the whole scene with continuously modulated laser light and recording the phase shift at the sensor pixels. iToF cameras can capture distance information for the entire scene at once. Unlike dToF, iToF does not directly measure the time interval between individual light pulses.

With an iToF camera, the distance to every point in the scene can be determined in a single shot.
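The phase-shift idea can be sketched in a few lines of Python. For a modulation frequency f, a measured phase shift φ corresponds to a distance of c·φ / (4π·f), and distances repeat every c / (2f). The four-sample phase estimate below assumes each pixel sample follows offset + amplitude × cos(phase − θ) at θ = 0°, 90°, 180°, 270°. This is one common textbook convention, and real sensors may order or sign their samples differently. All function names and values are illustrative.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def phase_from_four_samples(s0, s90, s180, s270):
    """Phase shift (rad, in [0, 2*pi)) from four samples of one pixel.

    Assumed model: s_theta = offset + amplitude * cos(phase - theta).
    The differences cancel the offset (ambient light) term.
    """
    return math.atan2(s90 - s270, s0 - s180) % (2.0 * math.pi)


def itof_distance(phase_rad, mod_freq_hz):
    """Distance (m) corresponding to a phase shift at the given modulation frequency."""
    return SPEED_OF_LIGHT_M_PER_S * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz):
    """Largest distance (m) before the phase wraps around and repeats."""
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * mod_freq_hz)


# Illustrative values: 20 MHz modulation, half-cycle phase shift.
print(f"{itof_distance(math.pi, 20e6):.3f} m")   # 3.747 m
print(f"{unambiguous_range(20e6):.3f} m")        # 7.495 m
```

The sketch also shows the basic trade-off in choosing a modulation frequency: a higher frequency gives more phase change per meter, but shortens the range over which distances are unambiguous.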

| Property | Structured light | Stereo vision | LiDAR | dToF | iToF |
| --- | --- | --- | --- | --- | --- |
| Principle | Distortion of the projected pattern | Comparison of images from two cameras | Time of flight of reflected light | Time of flight of reflected light | Phase shift of modulated light |
| Software complexity | High | High | Low | Low | Medium |
| Cost | High | Low | Variable | Low | Medium |
| Accuracy | Micrometer level | Centimeter level | Range-dependent | Millimeter to centimeter | Millimeter to centimeter |
| Range | Short | ~6 meters | Highly scalable | Scalable | Scalable |
| Low-light performance | Good | Weak | Good | Good | Good |
| Outdoor performance | Weak | Good | Good | Moderate | Moderate |
| Scanning speed | Slow | Medium | Slow | Fast | Very fast |
| Compactness | Medium | Low | Low | High | Medium |
| Power consumption | High | Low to scalable | High to scalable | Medium | Scalable to medium |

Common applications of depth cameras

- Autonomous vehicles: Depth cameras give autonomous vehicles the environmental perception they need to detect and avoid obstacles and to perform accurate navigation and route planning.

- Security and surveillance: Depth cameras are used in security for face recognition, crowd monitoring, and intrusion detection, improving both safety and response time.

- Augmented reality (AR): Depth sensing is used in augmented reality applications to overlay virtual images accurately on the real world, giving users an immersive experience.

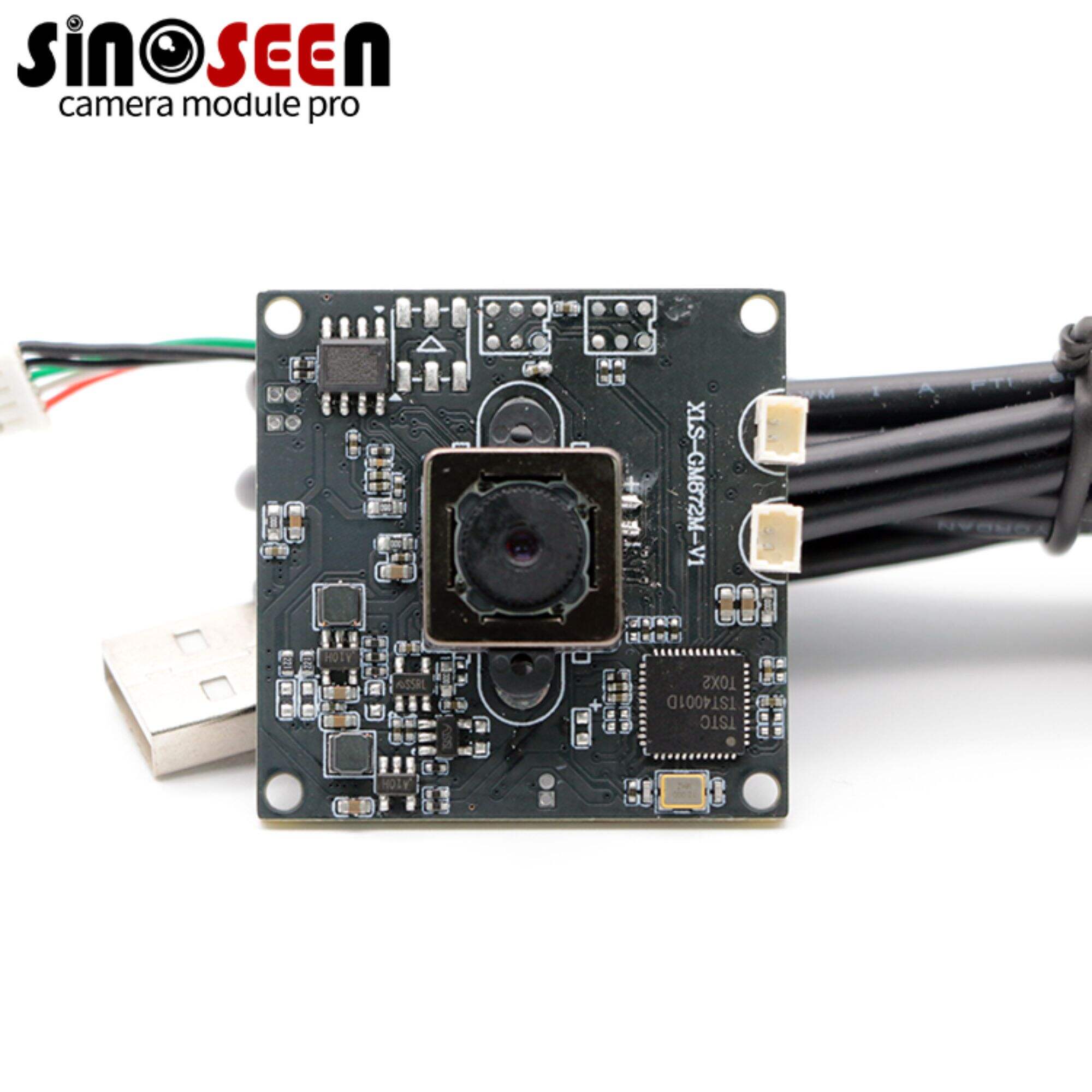

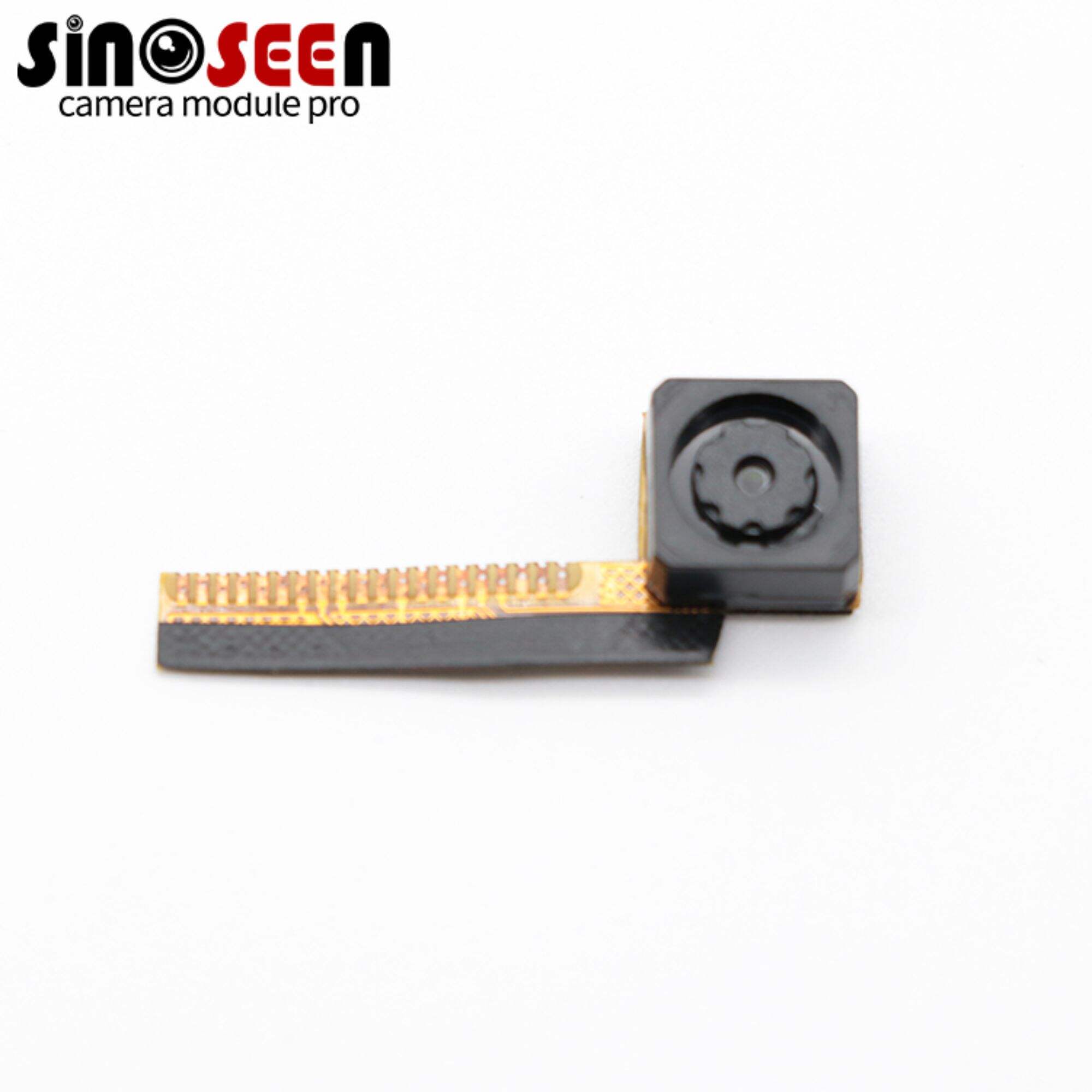

Sinoseen provides the right depth-sensing camera for you

As an established camera module manufacturer, Sinoseen has extensive experience in designing, developing, and manufacturing OEM camera modules. We provide high-performance ToF depth camera modules compatible with interfaces such as USB, GMSL, and MIPI, with support for advanced imaging features including global shutter and infrared imaging.

If your embedded vision application needs ToF depth camera modules, feel free to contact us. We are confident our team can offer a suitable solution. You can also browse our camera module product list to see whether a module matches your needs.
